Helper Script


!pip install nilearn --quiet

import os

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

The global constants used in the code are:

| Constant | Type | Description |
|---|---|---|
| N_SUBJECTS | int | Number of subjects included |
| N_PARCELS | int | Number of regions of interest (parcels) |
| TR | float | Time resolution (TR), in seconds |
| HEMIS | list of strings | Hemisphere names; the parcels (regions of interest) are matched across hemispheres in the same order |
| N_RUNS | int | Number of times the experiment was performed on each subject |
| RUNS | list of strings | Name tags for the runs |
| EXPERIMENTS | dict of dict | Task names as keys and task conditions as values |

N_SUBJECTS = 100

N_PARCELS = 360

TR = 0.72

HEMIS = ["Right", "Left"]

RUNS = ['LR','RL']

N_RUNS = 2

EXPERIMENTS = {

'EMOTION' : {'cond':['fear','neut']},

'GAMBLING' : {'cond':['loss','win']},

'LANGUAGE' : {'cond':['math','story']},

'SOCIAL' : {'cond':['mental','rnd']}

}
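The HEMIS note says the parcels are matched across hemispheres in the same order. Assuming the first N_PARCELS // 2 parcels are right-hemisphere (which is how the region table further down labels them), a parcel's homologue in the other hemisphere is found by shifting its index by half. A small sketch; `homologue` is a hypothetical helper, not part of the original code.

```python
import numpy as np

# Values copied from the constants above.
N_PARCELS = 360
HEMIS = ["Right", "Left"]

half = N_PARCELS // 2
# Assumption: parcels 0..179 belong to HEMIS[0] and 180..359 to HEMIS[1].
hemi_labels = np.repeat(HEMIS, half)

def homologue(parcel):
    """Return the index of the matching parcel in the opposite hemisphere."""
    return (parcel + half) % N_PARCELS

print(hemi_labels[0], hemi_labels[-1])  # Right Left
print(homologue(0), homologue(180))     # 180 0
```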

HCP_DIR = r'/Users/rajdeep_ch/Documents/nma/project/hcp_task'

subjects = list(np.loadtxt(os.path.join(HCP_DIR,'subjects_list.txt'), dtype='str'))

Here is the list of the 100 subject IDs:

subjects

['100307',

'100408',

'101915',

'102816',

'103414',

'103515',

'103818',

'105115',

'105216',

'106016',

'106319',

'110411',

'111009',

'111312',

'111514',

'111716',

'113215',

'113619',

'114924',

'115320',

'117122',

'117324',

'118730',

'118932',

'119833',

'120111',

'120212',

'122317',

'123117',

'124422',

'125525',

'126325',

'127933',

'128632',

'129028',

'130013',

'130316',

'130922',

'131924',

'133625',

'133827',

'133928',

'134324',

'135932',

'136833',

'137128',

'138231',

'138534',

'139637',

'140824',

'142828',

'143325',

'148032',

'148335',

'149337',

'149539',

'150524',

'151223',

'151526',

'151627',

'153025',

'153429',

'154431',

'156233',

'156637',

'157336',

'158035',

'158540',

'159239',

'159340',

'160123',

'161731',

'162329',

'162733',

'163129',

'163432',

'167743',

'172332',

'175439',

'176542',

'178950',

'182739',

'185139',

'188347',

'189450',

'190031',

'192439',

'192540',

'193239',

'194140',

'196144',

'196750',

'197550',

'198451',

'199150',

'199655',

'200614',

'201111',

'201414',

'205119']

task_list = list(EXPERIMENTS.keys())

Helper 1 - Loading the timeseries for a single subject

def load_single_timeseries(subject, experiment, run, remove_mean=True):
    """Load timeseries data for a single subject and single run.

    Args:
        subject (str): subject ID to load
        experiment (str): name of experiment
        run (int): run index (0 = 'LR', 1 = 'RL')
        remove_mean (bool): if True, subtract the parcel-wise mean
            (typically the mean BOLD signal is not of interest)

    Returns:
        ts (n_parcel x n_timepoint array): array of BOLD data values
    """
    RUNS = {0: 'LR', 1: 'RL'}
    bold_run = RUNS[run]
    bold_path = f"{HCP_DIR}/subjects/{subject}/{experiment}/tfMRI_{experiment}_{bold_run}"
    bold_file = "data.npy"
    ts = np.load(f"{bold_path}/{bold_file}")
    if remove_mean:
        # in-place subtraction; this requires the stored BOLD data to be floating point
        ts -= ts.mean(axis=1, keepdims=True)
    return ts
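A small synthetic illustration of what remove_mean=True does: each parcel's mean over time is subtracted, so every row ends up centred on zero. The array is made up; the in-place `ts -= ...` in the helper only works because the loaded data is floating point (NumPy refuses to cast a float result back into an integer array in place), so this sketch uses out-of-place subtraction.

```python
import numpy as np

# Synthetic stand-in for one run: 3 parcels x 5 timepoints (real data is 360 x n_timepoints).
ts = np.array([[1.0, 2.0, 3.0, 4.0, 5.0],
               [10.0, 10.0, 10.0, 10.0, 10.0],
               [0.0, 2.0, 0.0, 2.0, 0.0]])

# Same operation as remove_mean=True: subtract each parcel's own mean over time.
# keepdims=True keeps the means as a (3, 1) column so they broadcast across timepoints.
ts_centered = ts - ts.mean(axis=1, keepdims=True)

print(ts_centered[0].tolist())                     # [-2.0, -1.0, 0.0, 1.0, 2.0]
print(np.allclose(ts_centered.mean(axis=1), 0.0))  # True
```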

Helper 2 - Loading EVs

def load_evs(subject, experiment, run):
    """Load EVs (explanatory variables) data for one task experiment.

    Args:
        subject (str): subject ID to load
        experiment (str): name of experiment
        run (int): run index (0 = 'LR', 1 = 'RL')

    Returns:
        frames_list (list of lists): for each condition, a list of frame-index
            arrays, one array per trial
    """
    frames_list = []
    RUNS = {0: 'LR', 1: 'RL'}
    TR = 0.72
    task_key = f'tfMRI_{experiment}_{RUNS[run]}'
    EXPERIMENTS = {
        'MOTOR'      : {'cond': ['lf', 'rf', 'lh', 'rh', 't', 'cue']},
        'WM'         : {'cond': ['0bk_body', '0bk_faces', '0bk_places', '0bk_tools',
                                 '2bk_body', '2bk_faces', '2bk_places', '2bk_tools']},
        'EMOTION'    : {'cond': ['fear', 'neut']},
        'GAMBLING'   : {'cond': ['loss', 'win']},
        'LANGUAGE'   : {'cond': ['math', 'story']},
        'RELATIONAL' : {'cond': ['match', 'relation']},
        'SOCIAL'     : {'cond': ['mental', 'rnd']}
    }

    for cond in EXPERIMENTS[experiment]['cond']:
        ev_file = f"{HCP_DIR}/subjects/{subject}/{experiment}/{task_key}/EVs/{cond}.txt"
        # each EV file has one row per trial: onset (s), duration (s), amplitude
        ev_array = np.loadtxt(ev_file, ndmin=2, unpack=True)
        ev = dict(zip(["onset", "duration", "amplitude"], ev_array))
        # Determine when each trial starts, rounded down to a whole frame
        start = np.floor(ev["onset"] / TR).astype(int)
        # Use the trial duration to determine how many frames to include for each trial
        duration = np.ceil(ev["duration"] / TR).astype(int)
        # Take the range of frames that corresponds to each trial
        frames = [s + np.arange(0, d) for s, d in zip(start, duration)]
        frames_list.append(frames)

    # frames_list holds one list of per-trial frame indices for each condition
    return frames_list
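To make the onset/duration to frame conversion in load_evs() concrete, here is the same arithmetic on two made-up trials (the EV values are hypothetical, not taken from the HCP files). With TR = 0.72 s, an onset at 10 s falls in frame floor(10 / 0.72) = 13, and a 5 s trial spans ceil(5 / 0.72) = 7 frames.

```python
import numpy as np

TR = 0.72  # seconds per frame, as in the constants above

# Hypothetical EV rows (onset s, duration s, amplitude); .T mimics np.loadtxt(..., unpack=True),
# which returns one row per column of the file.
ev_array = np.array([[10.0, 5.0, 1.0],
                     [40.0, 5.0, 1.0]]).T
ev = dict(zip(["onset", "duration", "amplitude"], ev_array))

start = np.floor(ev["onset"] / TR).astype(int)       # first frame of each trial
duration = np.ceil(ev["duration"] / TR).astype(int)  # frames needed to cover each trial
frames = [s + np.arange(0, d) for s, d in zip(start, duration)]

print(start.tolist())      # [13, 55]
print(duration.tolist())   # [7, 7]
print(frames[0].tolist())  # [13, 14, 15, 16, 17, 18, 19]
```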

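Helper 3 - Average Frames

Currently not working: a single subject's timeseries is 2-D (n_parcels x n_timepoints), so timeseries_data.shape[2] below raises an IndexError; the number of timepoints is timeseries_data.shape[1].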

def average_frames(timeseries_data, evs_data, experiment_name, task_cond):
    """
    Take the brain activity measures and behavioural measures for a particular
    subject in a given task condition and return the brain activity averaged
    across all trial frames, i.e. the time-averaged activity of every ROI.

    Input:
        timeseries_data (ndarray): timeseries data for a single subject in a given experiment
        evs_data (list or ndarray): EVs data for the same subject
        experiment_name (str): name of the experiment (EMOTION/SOCIAL)
        task_cond (str): name of the task condition (fear/neut)

    Returns:
        average_values (ndarray): time-averaged activation across all ROIs,
            shape = (ROIs,)
    """
    idx = EXPERIMENTS[experiment_name]['cond'].index(task_cond)
    print('IDX: {}'.format(idx))
    print('Here is evs[idx][-1][-1]: {}'.format(evs_data[idx][-1][-1]), end="\n")
    print("Length of timepoints: {}".format(timeseries_data.shape[2]))
    #if evs_data[idx][-1][-1] > timeseries_data.shape[2]:
    #    print("Conditional works!")
    #    average_values = np.mean(np.concatenate([np.mean(timeseries_data[:,evs_data[idx][i]],axis=1,keepdims=True) for i in range(len(evs_data[idx])-1)], axis = -1), axis = 1)
    #else:
    #    print("No need for conditional to work")
    #    average_values = np.mean(np.concatenate([np.mean(timeseries_data[:,evs_data[idx][i]],axis=1,keepdims=True) for i in range(len(evs_data[idx]))], axis = -1), axis = 1)
    #return average_values

average_frames(emotion_timeseries[0], emotion_evs[0], 'EMOTION', 'neut')

IDX: 1

Here is evs[idx][-1][-1]: 156

---------------------------------------------------------------------------
IndexError                                Traceback (most recent call last)
/Users/rajdeep_ch/Documents/nma/tutorials/project_main.ipynb Cell 16 in <cell line: 1>()
----> 1 average_frames(emotion_timeseries[0],emotion_evs[0],'EMOTION','neut')

/Users/rajdeep_ch/Documents/nma/tutorials/project_main.ipynb Cell 16 in average_frames(timeseries_data, evs_data, experiment_name, task_cond)
     21 print('IDX: {}'.format(idx))
     23 print('Here is evs[idx][-1][-1]: {}'.format(evs_data[idx][-1][-1]),end="\n")
---> 24 print("Length of timepoints: {}".format(timeseries_data.shape[2]))

IndexError: tuple index out of range
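The IndexError comes from indexing a third axis that a single subject's data does not have: load_all_timeseries() returns (subjects, parcels, timepoints), so emotion_timeseries[0] is 2-D and its timepoints are shape[1]. The commented-out check also compares with `>`, which misses a frame index exactly equal to the number of timepoints (valid indices stop at n_timepoints - 1). A synthetic illustration; the sizes are arbitrary stand-ins, not the real EMOTION run length.

```python
import numpy as np

# Synthetic stand-ins (sizes are illustrative): 4 subjects, 360 parcels, 176 timepoints.
all_subjects = np.zeros((4, 360, 176))
one_subject = all_subjects[0]      # what average_frames() receives: (parcels, timepoints)

print(all_subjects.ndim, one_subject.ndim)  # 3 2
n_timepoints = one_subject.shape[1]         # the time axis of a single subject is axis 1
print(n_timepoints)                         # 176

# Valid frame indices run from 0 to n_timepoints - 1, so an index equal to
# n_timepoints is already out of range; a bounds check needs >=, not >.
last_frame = 176                            # hypothetical last EV frame index
print(last_frame > n_timepoints, last_frame >= n_timepoints)  # False True
```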

Function 1 - load_all_timeseries()

Loads the timeseries for all subjects.

def load_all_timeseries(subject_list, experiment_name, run_num):
    """
    Read the timeseries data of all subjects in the subject list for a given
    experiment and run.

    Inputs:
        subject_list (list): list containing subject IDs
        experiment_name (str): name of the task under consideration
        run_num (int): run index (0 = 'LR', 1 = 'RL')

    Return:
        data_array (ndarray): timeseries data of all subjects,
            shape (n_subjects, 360, n_timepoints), i.e. (100, 360, n_timepoints) here
    """
    data_list = []
    for sub_id in subject_list:
        sub_data = load_single_timeseries(sub_id, experiment_name, run_num)
        data_list.append(sub_data)
    # stacking into one array assumes every run has the same number of timepoints
    data_array = np.array(data_list)
    return data_array
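load_all_timeseries() relies on np.array(data_list) producing a (subjects, parcels, timepoints) block, which only happens when every run has the same number of timepoints. A hedged alternative is np.stack, which makes that requirement explicit and fails with a clear error otherwise. The arrays below are random stand-ins, not HCP data.

```python
import numpy as np

# Hypothetical per-subject runs (parcels x timepoints), as load_single_timeseries() returns.
runs = [np.random.default_rng(i).standard_normal((360, 50)) for i in range(3)]

# np.stack adds the subject axis explicitly and raises if the shapes differ.
data_array = np.stack(runs, axis=0)
print(data_array.shape)  # (3, 360, 50)

# A run with one timepoint fewer cannot be stacked into a regular block.
ragged = runs[:2] + [np.zeros((360, 49))]
try:
    np.stack(ragged, axis=0)
except ValueError as err:
    print("cannot stack:", err)
```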

Load the EMOTION, SOCIAL, and GAMBLING timeseries for all subjects from the first run.

# currently taking data for run index 0 (LR)
emotion_timeseries = load_all_timeseries(subjects, 'EMOTION', 0)
social_timeseries = load_all_timeseries(subjects, 'SOCIAL', 0)
gambling_timeseries = load_all_timeseries(subjects, 'GAMBLING', 0)

Function 2 - load_all_evs()

Loads the EVs data for all subjects across all task conditions.

def load_all_evs(subject_list, experiment_name, run_num):
    """
    Read the EVs data for all subjects in the subject list across all task conditions.

    Inputs:
        subject_list (list): list containing subject IDs
        experiment_name (str): name of the task under consideration
        run_num (int): run index (0 = 'LR', 1 = 'RL')

    Returns:
        data_array (ndarray): explanatory variables (EVs) for all subjects
    """
    data_list = []
    for sub_id in subject_list:
        sub_evs = load_evs(sub_id, experiment_name, run_num)
        data_list.append(sub_evs)
    # np.array only builds a regular array when every subject and condition has the
    # same number of trials and frames. For ragged EVs (e.g. SOCIAL) older NumPy
    # returns an object array and NumPy 1.24+ raises ValueError; in that case,
    # return data_list as a plain list instead.
    data_array = np.array(data_list)
    return data_array

# load_all_evs() returns the explanatory variables (frame indices) for every subject
emotion_evs = load_all_evs(subjects, 'EMOTION', 0)
social_evs = load_all_evs(subjects, 'SOCIAL', 0)
gambling_evs = load_all_evs(subjects, 'GAMBLING', 0)

emotion_evs.shape

(100, 2, 3, 25)
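The (100, 2, 3, 25) shape only appears because, for EMOTION, every subject has 3 trials of 25 frames in each condition. The SOCIAL EVs printed below come out as (100, 2) because their trial lists are not rectangular, so NumPy falls back to an object array (recent NumPy versions raise ValueError instead). A sketch for inspecting the nested structure directly, without converting it to an array; `describe_evs` is a hypothetical helper and the frame ranges are made up.

```python
import numpy as np

# Hypothetical EVs for one subject in the nested layout load_evs() returns:
# one entry per condition, each a list of per-trial frame-index arrays.
sub_evs = [
    [np.arange(10, 35), np.arange(60, 85), np.arange(110, 135)],  # condition 0: 3 trials
    [np.arange(40, 55), np.arange(90, 105)],                      # condition 1: 2 trials
]

def describe_evs(evs):
    """Return (n_trials, frames_per_trial) for each condition without building an ndarray."""
    return [(len(trials), [len(t) for t in trials]) for trials in evs]

print(describe_evs(sub_evs))  # [(3, [25, 25, 25]), (2, [15, 15])]
```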

Function 3 - all_average_frames()

The function computes the trial-averaged activity of every subject for a given experiment's task condition.

def all_average_frames(subject_list, timeseries_data, evs_data, experiment_name, task_cond):
    """
    Take the brain activity measures and behavioural measures for all subjects in
    a given task condition and return, for each subject, the brain activity
    averaged across all trial frames.

    Input:
        subject_list (list): list containing subject IDs
        timeseries_data (ndarray): timeseries data for all subjects in a given experiment
        evs_data (ndarray or list): EVs data for all subjects
        experiment_name (str): name of the experiment (EMOTION/SOCIAL)
        task_cond (str): name of the task condition (fear/neut)

    Returns:
        data_array (ndarray): time-averaged activation across all ROIs,
            shape = (number of subjects, ROIs)
    """
    data_array = np.zeros((len(subject_list), N_PARCELS))
    for sub_num in range(len(subject_list)):
        sub_average = average_frames(timeseries_data[sub_num], evs_data[sub_num],
                                     experiment_name, task_cond)
        data_array[sub_num] = sub_average
    return data_array
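average_frames() averages within each trial first and then across trials, so every trial gets equal weight regardless of its length. When trials differ in length this is not the same as pooling all of a condition's frames. A synthetic comparison (values and trial frames are made up):

```python
import numpy as np

# Synthetic single-subject data: 2 parcels x 10 timepoints (values 0..9 and 10..19).
ts = np.arange(20, dtype=float).reshape(2, 10)
trials = [np.array([0, 1, 2]), np.array([8])]   # two trials of unequal length

# What average_frames() does: mean within each trial, then mean across trials.
per_trial = np.concatenate([ts[:, t].mean(axis=1, keepdims=True) for t in trials], axis=-1)
mean_of_means = per_trial.mean(axis=1)

# Alternative: pool every frame of the condition, then average once.
pooled = ts[:, np.concatenate(trials)].mean(axis=1)

print(mean_of_means.tolist())  # [4.5, 14.5]
print(pooled.tolist())         # [2.75, 12.75]
```

Neither choice is wrong; the per-trial version treats trials as the unit of observation, while pooling weights long trials more heavily.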

print("Shape of Emotion EVS: {}".format(np.array(emotion_evs).shape))

print("Shape of Social EVS: {}".format(np.array(social_evs).shape))

print("Shape of Gambling EVS: {}".format(np.array(gambling_evs).shape))

Shape of Emotion EVS: (100, 2, 3, 25)

Shape of Social EVS: (100, 2)

Shape of Gambling EVS: (100, 2, 2, 39)

Bug 1: a conditional was added so that the EV frame indices from load_evs() line up with the timeseries timepoints. With it in place, average_frames() does not work, so the version below removes the buggy conditional.

def average_frames(timeseries_data, evs_data, experiment_name, task_cond):
    """
    Take the timeseries data and EVs data for a particular subject and a given
    task condition of a particular experiment, and return the activity of every
    ROI averaged over the condition's trials (mean of the per-trial means),
    shape (ROIs,).
    """
    idx = EXPERIMENTS[experiment_name]['cond'].index(task_cond)
    # mean over each trial's frames, giving one (ROIs, 1) column per trial
    trial_means = [np.mean(timeseries_data[:, evs_data[idx][i]], axis=1, keepdims=True)
                   for i in range(len(evs_data[idx]))]
    # then average the per-trial columns
    average_values = np.mean(np.concatenate(trial_means, axis=-1), axis=1)
    return average_values
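The conditional removed above was meant to guard against EV frames that run past the end of the timeseries. Instead of dropping the whole last trial, one option is to keep only the in-range frame indices of each trial. This is a hedged sketch, not the notebook's method: `average_frames_clipped` is a hypothetical name, and it takes one condition's trial list directly rather than looking it up by name.

```python
import numpy as np

def average_frames_clipped(timeseries_data, trial_frames):
    """Hypothetical variant: mean of per-trial means, ignoring frame indices past the run.

    timeseries_data: (n_parcels, n_timepoints) array for one subject and run.
    trial_frames: list of 1-D integer arrays, one per trial of a single condition.
    """
    n_timepoints = timeseries_data.shape[1]
    trial_means = []
    for frames in trial_frames:
        valid = frames[frames < n_timepoints]   # drop indices that fall off the end
        if valid.size:                          # skip trials with no frames left
            trial_means.append(timeseries_data[:, valid].mean(axis=1))
    return np.mean(trial_means, axis=0)

ts = np.arange(12, dtype=float).reshape(2, 6)
trials = [np.array([0, 1]), np.array([4, 5, 6, 7])]  # second trial runs past frame 5
print(average_frames_clipped(ts, trials).tolist())  # [2.5, 8.5]
```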

def load_all_task_score(subject_list, experiment_name, run_num):
    """
    Load task performance statistics for one task experiment.

    Args:
        subject_list (list): subject IDs to load
        experiment_name (str): name of experiment
        run_num (int): run index (0 = 'LR', 1 = 'RL')

    Returns:
        task_scores (list of lists): task performance scores for all subjects in a
            particular run, one list of (name, value) tuples per subject
    """
    task_scores = []
    RUNS = {0: 'LR', 1: 'RL'}
    task_key = f'tfMRI_{experiment_name}_{RUNS[run_num]}'
    for sub_id in subject_list:
        # each run's EVs folder holds a Stats.txt file with the behavioural summary
        task_file_loc = os.path.join(HCP_DIR, 'subjects/{}/{}/{}/EVs/Stats.txt'.format(sub_id, experiment_name, task_key))
        sub_task_score = get_task_data(task_file_loc)
        task_scores.append(sub_task_score)
    return task_scores

def get_task_data(taskfile_loc):
    """
    Load the task data from a file location and return a list of
    (column name, task score) tuples.

    Input:
        taskfile_loc (str): file location of the performance statistics

    Return:
        subject_all_score (list of tuples): [(column name, task score), ...]
    """
    subject_all_score = []
    with open(taskfile_loc, 'r') as taskfile:
        for line in taskfile:
            # each line looks like "Face Accuracy: 1.0"
            data = line.strip().split(':')
            string_data = data[0]
            numeric_data = float(data[1])
            subject_all_score.append((string_data, numeric_data))
    # the with block closes the file automatically
    return subject_all_score
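get_task_data() returns a list of (name, value) tuples; turning each file into a dict keyed by statistic name makes it straightforward to build one table row per subject later. A stdlib-only sketch; `parse_stats` is a hypothetical helper, and the sample text reuses the Stats.txt values printed further down for subject 159340. Unlike the loop above, it skips blank lines, which would otherwise raise IndexError at data[1].

```python
import io

# Sample contents in the "name: value" layout of Stats.txt (values from subject 159340).
sample = io.StringIO("Face Accuracy: 1.0\nShape Accuracy: 1.0\n"
                     "Median Face RT: 890.066666667\nMedian Shape RT: 1022.22222222\n")

def parse_stats(lines):
    """Return {statistic name: float value}, skipping blank lines."""
    scores = {}
    for line in lines:
        if not line.strip():
            continue
        name, value = line.split(":", 1)   # split at the first colon only
        scores[name.strip()] = float(value)
    return scores

scores = parse_stats(sample)
print(scores)
```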

emotion_ts = load_all_task_score(subjects[:25], 'EMOTION', 0)

emotion_ts

[[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 623.8),

('Median Shape RT', 660.588235294)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 670.866666667),

('Median Shape RT', 711.944444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 834.533333333),

('Median Shape RT', 864.647058824)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 661.2),

('Median Shape RT', 711.111111111)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 856.133333333),

('Median Shape RT', 784.722222222)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 759.285714286),

('Median Shape RT', 771.722222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 797.066666667),

('Median Shape RT', 917.555555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 717.0),

('Median Shape RT', 755.944444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.888888888889),

('Median Face RT', 700.533333333),

('Median Shape RT', 591.0625)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 723.333333333),

('Median Shape RT', 785.5)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 812.933333333),

('Median Shape RT', 722.882352941)],

[('Face Accuracy', 0.866666666667),

('Shape Accuracy', 1.0),

('Median Face RT', 910.461538462),

('Median Shape RT', 825.444444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 816.8),

('Median Shape RT', 698.777777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 699.933333333),

('Median Shape RT', 789.235294118)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 773.133333333),

('Median Shape RT', 738.222222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 865.866666667),

('Median Shape RT', 786.833333333)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 523.266666667),

('Median Shape RT', 555.0)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 951.666666667),

('Median Shape RT', 901.722222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 787.2),

('Median Shape RT', 738.722222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 897.4),

('Median Shape RT', 840.0)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 886.533333333),

('Median Shape RT', 797.888888889)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 943.2),

('Median Shape RT', 861.611111111)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 778.428571429),

('Median Shape RT', 777.888888889)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 652.466666667),

('Median Shape RT', 710.555555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.888888888889),

('Median Face RT', 745.6),

('Median Shape RT', 909.0625)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 872.133333333),

('Median Shape RT', 751.833333333)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 696.0),

('Median Shape RT', 751.666666667)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 1016.78571429),

('Median Shape RT', 1088.66666667)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 921.733333333),

('Median Shape RT', 719.333333333)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 577.8),

('Median Shape RT', 819.5)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 904.733333333),

('Median Shape RT', 873.5)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 614.533333333),

('Median Shape RT', 609.0)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 847.066666667),

('Median Shape RT', 793.764705882)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 660.266666667),

('Median Shape RT', 641.777777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 894.066666667),

('Median Shape RT', 934.111111111)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 886.666666667),

('Median Shape RT', 895.722222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 551.8),

('Median Shape RT', 668.647058824)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 612.333333333),

('Median Shape RT', 546.0)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 625.6),

('Median Shape RT', 749.722222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 895.6),

('Median Shape RT', 908.333333333)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 889.466666667),

('Median Shape RT', 855.823529412)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 817.266666667),

('Median Shape RT', 838.611111111)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 0.888888888889),

('Median Face RT', 864.928571429),

('Median Shape RT', 800.3125)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 1101.33333333),

('Median Shape RT', 958.833333333)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 769.533333333),

('Median Shape RT', 860.388888889)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 711.333333333),

('Median Shape RT', 728.888888889)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 816.142857143),

('Median Shape RT', 877.944444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 743.8),

('Median Shape RT', 710.235294118)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 725.466666667),

('Median Shape RT', 794.722222222)],

[('Face Accuracy', 0.8),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 860.25),

('Median Shape RT', 825.176470588)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 746.933333333),

('Median Shape RT', 700.888888889)],

[('Face Accuracy', 0.866666666667),

('Shape Accuracy', 1.0),

('Median Face RT', 913.538461538),

('Median Shape RT', 995.666666667)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 663.266666667),

('Median Shape RT', 741.882352941)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 795.933333333),

('Median Shape RT', 756.666666667)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 747.2),

('Median Shape RT', 701.388888889)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 573.466666667),

('Median Shape RT', 558.176470588)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 837.8),

('Median Shape RT', 893.5)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 743.428571429),

('Median Shape RT', 695.0)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 1003.57142857),

('Median Shape RT', 760.944444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 603.866666667),

('Median Shape RT', 678.277777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 681.733333333),

('Median Shape RT', 600.444444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 689.133333333),

('Median Shape RT', 630.611111111)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 747.466666667),

('Median Shape RT', 802.5)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 872.8),

('Median Shape RT', 917.555555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 1014.26666667),

('Median Shape RT', 878.588235294)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 775.2),

('Median Shape RT', 713.055555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 692.866666667),

('Median Shape RT', 793.176470588)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 982.6),

('Median Shape RT', 970.5)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.833333333333),

('Median Face RT', 824.6),

('Median Shape RT', 875.266666667)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 890.066666667),

('Median Shape RT', 1022.22222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 829.066666667),

('Median Shape RT', 798.555555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 642.866666667),

('Median Shape RT', 673.0)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 908.666666667),

('Median Shape RT', 932.222222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 857.0),

('Median Shape RT', 804.444444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 576.533333333),

('Median Shape RT', 594.055555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 624.533333333),

('Median Shape RT', 685.277777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 789.4),

('Median Shape RT', 824.055555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 856.333333333),

('Median Shape RT', 953.833333333)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 1012.86666667),

('Median Shape RT', 936.277777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 699.6),

('Median Shape RT', 740.411764706)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 1072.33333333),

('Median Shape RT', 779.222222222)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 849.2),

('Median Shape RT', 815.5)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 728.2),

('Median Shape RT', 716.117647059)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 732.142857143),

('Median Shape RT', 675.111111111)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 868.6),

('Median Shape RT', 797.888888889)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 666.733333333),

('Median Shape RT', 777.722222222)],

[('Face Accuracy', 0.866666666667),

('Shape Accuracy', 1.0),

('Median Face RT', 1074.23076923),

('Median Shape RT', 876.555555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 948.333333333),

('Median Shape RT', 979.555555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 840.133333333),

('Median Shape RT', 911.444444444)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 634.4),

('Median Shape RT', 694.888888889)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 822.666666667),

('Median Shape RT', 783.333333333)],

[('Face Accuracy', 0.933333333333),

('Shape Accuracy', 1.0),

('Median Face RT', 670.142857143),

('Median Shape RT', 659.777777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 749.0),

('Median Shape RT', 709.055555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 747.6),

('Median Shape RT', 729.647058824)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 738.6),

('Median Shape RT', 806.166666667)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 666.0),

('Median Shape RT', 668.941176471)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 835.933333333),

('Median Shape RT', 793.277777778)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 928.133333333),

('Median Shape RT', 1104.05555556)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 1.0),

('Median Face RT', 804.8),

('Median Shape RT', 806.166666667)],

[('Face Accuracy', 1.0),

('Shape Accuracy', 0.944444444444),

('Median Face RT', 741.4),

('Median Shape RT', 804.294117647)]]

social_ts = load_all_task_score(subjects[:5], 'SOCIAL', 0)

social_ts[0]

[('Mean RT Mental', 479.0),

('Mean RT Random', 575.0),

('ACC Mental', 1.0),

('ACC Random', 1.0),

('Percent Mental', 0.6),

('Percent Random', 0.4),

('Percent Unsure', 0.0),

('Percent No Response', 0.0)]

task_score_col = ['Face Accuracy','Shape Accuracy', 'Median Face RT','Median Shape RT']

df1 = pd.DataFrame(columns=task_score_col)

df1.head()

| Face Accuracy | Shape Accuracy | Median Face RT | Median Shape RT |
|---|---|---|---|

HCP_DIR

'/Users/rajdeep_ch/Documents/nma/project/hcp_task'

taskfile_loc = os.path.join(HCP_DIR,'subjects/159340/EMOTION/tfMRI_EMOTION_LR/EVs/Stats.txt')

taskfile_loc

'/Users/rajdeep_ch/Documents/nma/project/hcp_task/subjects/159340/EMOTION/tfMRI_EMOTION_LR/EVs/Stats.txt'

taskfile = open(taskfile_loc,"r")

#print(taskfile.read())

type(taskfile)

_io.TextIOWrapper

test_list = []
with open(taskfile_loc, 'r') as taskfile:
    for line in taskfile:
        data = line.strip().split(':')
        print(data)
        string_data = data[0]
        numbers = float(data[1])
        print(float(data[1]))
        print(numbers)
        test_list.append((string_data, numbers))

['Face Accuracy', ' 1.0']

1.0

1.0

['Shape Accuracy', ' 1.0']

1.0

1.0

['Median Face RT', ' 890.066666667']

890.066666667

890.066666667

['Median Shape RT', ' 1022.22222222']

1022.22222222

1022.22222222

df = pd.DataFrame(np.array(test_list).T)

df.head()

|   | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | Face Accuracy | Shape Accuracy | Median Face RT | Median Shape RT |
| 1 | 1.0 | 1.0 | 890.066666667 | 1022.22222222 |

df.columns.name = None

df.columns = list(df.iloc[0,:])

df.drop(0)

|   | Face Accuracy | Shape Accuracy | Median Face RT | Median Shape RT |
|---|---|---|---|---|
| 1 | 1.0 | 1.0 | 890.066666667 | 1022.22222222 |
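Building the table by transposing np.array(test_list) turns every value into a string, because NumPy coerces the mixed (name, number) tuples to a single string dtype; df.drop(0) also returns a new frame rather than changing df. A sketch that keeps the scores numeric by turning each subject's list of tuples into a dict, using the first two entries of the emotion_ts output above as sample data:

```python
import pandas as pd

# Two subjects' scores in the list-of-(name, value) form that load_all_task_score() returns
# (values copied from the emotion_ts output above).
scores = [
    [('Face Accuracy', 1.0), ('Shape Accuracy', 0.944444444444),
     ('Median Face RT', 623.8), ('Median Shape RT', 660.588235294)],
    [('Face Accuracy', 1.0), ('Shape Accuracy', 1.0),
     ('Median Face RT', 670.866666667), ('Median Shape RT', 711.944444444)],
]

# dict() turns each subject's pairs into {column: value}; pandas aligns columns by key.
df_scores = pd.DataFrame([dict(pairs) for pairs in scores])
print(df_scores)
```

Passing index=subject_list[:len(scores)] to pd.DataFrame would label each row with its subject ID, assuming the scores are kept in subject order.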

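Working with the regions file

def get_task_contrast(subject_list, experiment_name, run_num):
    """
    Return, for every subject, the contrast between the experiment's two task
    conditions (first condition minus second) for one run,
    shape (n_subjects, N_PARCELS).
    """
    # load in the timeseries for the subjects
    task_timeseries = load_all_timeseries(subject_list, experiment_name, run_num)
    # load in the evs for the subjects
    task_evs = load_all_evs(subject_list, experiment_name, run_num)
    # use the average frames function to get the condition averages
    # (all_average_frames() needs the data and a task condition, not the run number)
    cond_a, cond_b = EXPERIMENTS[experiment_name]['cond']
    average_a = all_average_frames(subject_list, task_timeseries, task_evs, experiment_name, cond_a)
    average_b = all_average_frames(subject_list, task_timeseries, task_evs, experiment_name, cond_b)
    return average_a - average_b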

regions_loc = os.path.join(HCP_DIR,'regions.npy')

regions = np.load(regions_loc).T

region_info = dict(Name=list(regions[0]), Map= list(regions[1]), Hemi = ['Right'] * int(N_PARCELS/2) + ['Left'] * int(N_PARCELS/2))

region_df = pd.DataFrame(region_info)

region_df.head()

|   | Name | Map | Hemi |

|---|---|---|---|

| 0 | R_V1 | Visual1 | Right |

| 1 | R_MST | Visual2 | Right |

| 2 | R_V6 | Visual2 | Right |

| 3 | R_V2 | Visual2 | Right |

| 4 | R_V3 | Visual2 | Right |

# SOCIAL
my_exp = 'SOCIAL'

# calculate group contrast SOCIAL
group_contrast_social = 0

# for a particular subject in the list of subjects
for s in subjects:
    # for a particular run of the selected subject:
    # the loop collects timeseries and evs data for both runs,
    # calculates the contrast between the task conditions in each run and adds it to the group's data
    for r in [0, 1]:
        data = load_single_timeseries(subject=s, experiment=my_exp,
                                      run=r, remove_mean=True)
        evs = load_evs(subject=s, experiment=my_exp, run=r)
        mental_activity = average_frames(data, evs, my_exp, 'mental')
        rnd_activity = average_frames(data, evs, my_exp, 'rnd')
        contrast = mental_activity - rnd_activity
        group_contrast_social += contrast

group_contrast_social /= (len(subjects) * 2)  # remember: 2 runs per subject

# Plot contrast bar graph SOCIAL
df = pd.DataFrame({'contrast' : group_contrast_social,
                   'network' : region_info['Map'],
                   'hemi' : region_info['Hemi']
                   })

# we will plot the mental minus random contrast, so we only need one plot
#plt.figure()
#sns.barplot(y='network', x='contrast', data=df, hue='hemi')
#plt.title('group contrast of mental vs random')
#plt.show()
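The loop above accumulates one contrast per subject and run and divides by len(subjects) * 2, which is simply the mean over all subject-run contrasts. A synthetic check of that equivalence, plus a per-network summary of the kind the commented-out bar plot would show (seaborn's barplot averages the rows within each category by default). The sizes and network labels are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_subjects, n_runs, n_parcels = 4, 2, 6   # toy sizes, not the real 100 x 2 x 360

# Hypothetical per-run contrasts (condition A minus condition B), one vector per subject and run.
contrasts = rng.standard_normal((n_subjects, n_runs, n_parcels))

# Running sum as in the loop above, then divide by the number of contrasts summed.
group = 0
for s in range(n_subjects):
    for r in range(n_runs):
        group += contrasts[s, r]
group /= n_subjects * n_runs

# Equivalent vectorised form: average over the subject and run axes together.
print(np.allclose(group, contrasts.mean(axis=(0, 1))))  # True

# Per-network mean of the group contrast; labels here are made up.
networks = np.array(["Visual1", "Visual1", "Visual2", "Visual2", "Auditory", "Auditory"])
for net in np.unique(networks):
    print(net, group[networks == net].mean())
```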

No need for conditional to work

short_df = df.iloc[:25,0:2]

short_df

| | contrast | network |
|---|---|---|
| 0 | 0.362457 | Visual1 |
| 1 | 6.231128 | Visual2 |
| 2 | -9.718446 | Visual2 |
| 3 | -10.336252 | Visual2 |
| 4 | 5.514508 | Visual2 |
| 5 | 10.860714 | Visual2 |
| 6 | 10.315644 | Visual2 |
| 7 | -4.458975 | Somatomotor |
| 8 | -1.319525 | Somatomotor |
| 9 | 3.766011 | Cingulo-Oper |
| 10 | 19.709574 | Language |
| 11 | 12.801832 | Default |
| 12 | -20.018026 | Visual2 |
| 13 | -3.090936 | Frontopariet |
| 14 | -7.402316 | Frontopariet |
| 15 | -2.314018 | Visual2 |
| 16 | 18.293229 | Visual2 |
| 17 | 24.647216 | Visual2 |
| 18 | 4.595847 | Visual2 |
| 19 | 20.287001 | Visual2 |
| 20 | 20.595323 | Visual2 |
| 21 | 18.707127 | Visual2 |
| 22 | -1.171799 | Visual2 |
| 23 | -5.061730 | Auditory |
| 24 | 11.030532 | Default |

gambling_average1[0][0] - gambling_average2[0][0]

8.964454038151064

Creating the task data and personality dataset

HCP_DIR

'/Users/rajdeep_ch/Documents/nma/project/hcp_task'

personality_file = r'/Users/rajdeep_ch/Documents/nma/project/personality_data.csv'

personality_df = pd.read_csv(personality_file)

personality_df = personality_df.iloc[:,0:6]
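To combine the personality table with per-subject task measures, the two need a common key. The subject IDs in `subjects` are strings, while `read_csv` parses the `Subject` column as integers, so the keys must be cast to one type first. A minimal sketch, assuming a per-subject score table like the hypothetical `ies_demo` below (all names and values are illustrative):

```python
import pandas as pd

# Hypothetical inputs: string subject IDs (as in `subjects`) and a personality
# table whose Subject column was parsed as integers by read_csv
ies_demo = pd.DataFrame({'Subject': ['100307', '100408', '101915'],
                         'social_mental_ies': [4.79, 10.59, 11.93]})
personality_demo = pd.DataFrame({'Subject': [100307, 100408, 101915],
                                 'NEOFAC_A': [37, 33, 35]})

# Cast both keys to the same dtype before merging; with mismatched dtypes
# the merge either fails or matches nothing
personality_demo['Subject'] = personality_demo['Subject'].astype(str)

# validate='one_to_one' raises if a subject appears twice on either side
merged = pd.merge(personality_demo, ies_demo, on='Subject', how='inner',
                  validate='one_to_one')
print(merged)
```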

emotion_ts = load_all_task_score(subjects[:5],'EMOTION',0)

social_ts = load_all_task_score(subjects,'SOCIAL',0)

gambling_ts = load_all_task_score(subjects,'GAMBLING',0)

emotion_ts = load_all_task_score(subjects,'EMOTION',0)

emotion_face_score = np.zeros(len(emotion_ts))
emotion_shape_score = np.zeros(len(emotion_ts))

# test arrays for checking whether accuracy == 0
emotion_acc_val1 = np.zeros(len(emotion_ts))
emotion_acc_val2 = np.zeros(len(emotion_ts))

# loop running over all participants
for i in range(len(emotion_ts)):
    sub_task_vals = emotion_ts[i]
    #print(sub_task_vals[0],sub_task_vals[2])
    #print(sub_task_vals[1],sub_task_vals[3][1])
    face_acc, face_time = sub_task_vals[0][1], sub_task_vals[2][1]
    # test
    emotion_acc_val1[i] = face_acc
    shape_acc, shape_time = sub_task_vals[1][1], sub_task_vals[3][1]
    # test
    emotion_acc_val2[i] = shape_acc
    #print("Score for shape: {}".format(shape_time,shape_acc),end="\n")
    #print("Score for face: {}".format(face_time / face_acc))
    #emotion_face_score[i] = (face_time / face_acc) / 100
    # (100 / 100) does not produce needed result
    # need to convert the value to percentage
    # use multinomial LR
    #emotion_shape_score[i] = (shape_time/ shape_acc) / 100

social_ts = load_all_task_score(subjects,'SOCIAL',0)

social_mental_score = np.zeros(len(social_ts))
social_random_score = np.zeros(len(social_ts))

# test arrays for checking whether social's accuracy == 0
social_acc_val1 = np.zeros(len(social_ts))
social_acc_val2 = np.zeros(len(social_ts))

#dict_check = {}
subject_id_list_to_remove = []

for k in range(len(social_ts)):
    #if social_ts[k][3][1] not in dict_check:
    #    dict_check[social_ts[k][3][1]] = 1
    #else:
    #    dict_check[social_ts[k][3][1]] += 1
    #print(social_ts[k][3])
    # index 3 holds ('ACC Random', value); flag subjects whose accuracy is zero
    if social_ts[k][3][1] == 0.0:
        subject_id_list_to_remove.append(subjects[k])

subject_id_list_to_remove

['133827', '192439']

social_ts[0]

[('Mean RT Mental', 479.0),

('Mean RT Random', 575.0),

('ACC Mental', 1.0),

('ACC Random', 1.0),

('Percent Mental', 0.6),

('Percent Random', 0.4),

('Percent Unsure', 0.0),

('Percent No Response', 0.0)]
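Since each subject's scores are a list of `(label, value)` tuples, as `social_ts[0]` shows, a statistic can be looked up by its label instead of by position, which keeps the code correct even if the order differs between tasks. A sketch with made-up scores (the lists, IDs, and the helper `get_stat` are hypothetical) that also finds the zero-accuracy subjects without an explicit loop counter:

```python
import numpy as np

# Made-up task scores in the same (label, value) layout as social_ts[0]
scores_demo = [
    [('Mean RT Mental', 479.0), ('Mean RT Random', 575.0),
     ('ACC Mental', 1.0), ('ACC Random', 1.0)],
    [('Mean RT Mental', 700.0), ('Mean RT Random', 650.0),
     ('ACC Mental', 1.0), ('ACC Random', 0.0)],
    [('Mean RT Mental', 810.0), ('Mean RT Random', 590.0),
     ('ACC Mental', 0.5), ('ACC Random', 0.5)],
]
subject_ids = ['100307', '133827', '100408']  # hypothetical ordering

def get_stat(subject_scores, label):
    """Return the value stored under `label` (hypothetical helper)."""
    return dict(subject_scores)[label]

acc_random = np.array([get_stat(s, 'ACC Random') for s in scores_demo])
zero_idx = np.flatnonzero(acc_random == 0)      # positions with zero accuracy
to_remove = [subject_ids[i] for i in zero_idx]
print(to_remove)  # ['133827']
```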

for i in range(len(social_ts)):
    sub_social_score = social_ts[i]
    #print(sub_social_score)
    # mental reaction time; mental accuracy
    #print(sub_social_score[0],sub_social_score[2])
    # random reaction time; random accuracy
    #print(sub_social_score[1],sub_social_score[3],end="\n")
    mental_rt, mental_acc = sub_social_score[0][1], sub_social_score[2][1]
    # test for mental_acc
    social_acc_val1[i] = mental_acc
    random_rt, random_acc = sub_social_score[1][1], sub_social_score[3][1]
    # test for random_acc
    social_acc_val2[i] = random_acc
    social_mental_score[i] = mental_rt / mental_acc
    # random_rt / random_acc raises ZeroDivisionError: random_acc == 0 for two subjects
    #social_random_score[i] = random_rt / random_acc
    #print('MENTAL: {}'.format(mental_rt / mental_acc))
    #print("RANDOM: {}".format(random_rt / random_acc),end="\n\n")

social_acc_val2

array([1. , 1. , 1. , 1. , 1. , 0.5, 0.5, 0.5, 1. , 1. , 0.5, 1. , 1. ,

0.5, 1. , 1. , 0.5, 1. , 1. , 1. , 0.5, 1. , 1. , 1. , 0.5, 1. ,

1. , 1. , 1. , 0.5, 0.5, 1. , 0.5, 1. , 1. , 1. , 1. , 1. , 1. ,

1. , 0. , 1. , 0.5, 1. , 1. , 1. , 1. , 1. , 1. , 0.5, 1. , 0.5,

1. , 0.5, 1. , 0.5, 0.5, 1. , 0.5, 1. , 1. , 1. , 1. , 1. , 0.5,

1. , 1. , 1. , 0.5, 1. , 1. , 1. , 1. , 1. , 1. , 1. , 0.5, 1. ,

1. , 1. , 1. , 0.5, 1. , 1. , 1. , 1. , 0. , 1. , 1. , 0.5, 0.5,

1. , 0.5, 1. , 1. , 1. , 1. , 1. , 1. , 0.5])


gambling_ts = load_all_task_score(subjects,'GAMBLING',0)

gambling_task_score = np.zeros(len(gambling_ts))

# array to test accuracy scores
gambling_acc = np.zeros(len(gambling_ts))

for i in range(len(gambling_ts)):
    sub_gambling_score = gambling_ts[i]
    #print(sub_gambling_score)
    #print(sub_gambling_score[0], sub_gambling_score[2])
    gamb_time = sub_gambling_score[0][1]
    gamb_acc = sub_gambling_score[2][1]
    gambling_acc[i] = gamb_acc
    #print(gamb_acc)

np.any(gambling_acc == 0)

False

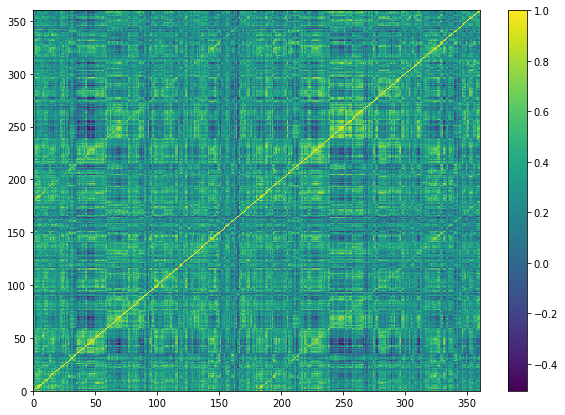

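# emotion_average has shape (subjects, ROIs, 1); pandas needs 2-D input
corr_df = pd.DataFrame(np.reshape(emotion_average, (len(emotion_average), -1)))

# correlation between ROIs across subjects
corr_mat = corr_df.corr()

plt.figure(figsize=(10,7))
plt.pcolor(corr_mat)
plt.colorbar()
plt.show()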
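`DataFrame.corr()` correlates columns, so with subjects as rows and ROIs as columns, `corr_mat` is an ROI-by-ROI matrix of Pearson correlations computed across subjects. A sketch on random data (the array `avg_demo` is a hypothetical stand-in for `emotion_average` with the documented `(subjects, ROIs, 1)` shape) confirms this matches NumPy's `corrcoef` with `rowvar=False`:

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for emotion_average: (subjects, ROIs, 1)
rng = np.random.default_rng(1)
avg_demo = rng.normal(size=(20, 5, 1))

# Drop the trailing singleton axis so pandas receives (subjects, ROIs)
X = avg_demo.reshape(avg_demo.shape[0], -1)

# Column-wise Pearson correlation: ROI against ROI across subjects
corr_pd = pd.DataFrame(X).corr()
corr_np = np.corrcoef(X, rowvar=False)

print(corr_pd.shape)                         # (5, 5)
print(np.allclose(corr_pd.values, corr_np))  # True
```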

Classifying the personality trait data

personality_df.head()

| | Subject | NEOFAC_A | NEOFAC_O | NEOFAC_C | NEOFAC_N | NEOFAC_E |
|---|---|---|---|---|---|---|
| 0 | 100307 | 37 | 24 | 35 | 15 | 37 |
| 1 | 100408 | 33 | 29 | 34 | 15 | 33 |
| 2 | 101915 | 35 | 30 | 45 | 8 | 31 |
| 3 | 102816 | 36 | 27 | 32 | 10 | 31 |
| 4 | 103414 | 27 | 30 | 31 | 20 | 34 |

test_personality = pd.DataFrame(personality_df)

test_personality.tail()

| | Subject | NEOFAC_A | NEOFAC_O | NEOFAC_C | NEOFAC_N | NEOFAC_E |
|---|---|---|---|---|---|---|
| 95 | 199655 | 24 | 29 | 33 | 15 | 28 |
| 96 | 200614 | 42 | 22 | 43 | 9 | 38 |
| 97 | 201111 | 36 | 35 | 42 | 31 | 23 |
| 98 | 201414 | 41 | 37 | 37 | 8 | 38 |
| 99 | 205119 | 40 | 30 | 37 | 11 | 33 |

for p in range(3):
    aSr = test_personality.iloc[p,1:]

aSr

class_personality = pd.DataFrame()

class_personality.head()

Script for loading the personality data and classifying each trait score into three classes:

score between 0 and 15: 0 (LOW)

score between 16 and 30: 1 (AVG)

otherwise (score above 30): 2 (HIGH)
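The same three-way rule can be expressed with `pandas.cut`, which bins a whole column at once and leaves the row index untouched. A minimal sketch (the function `classify_scores` and the demo frame are hypothetical; the bin edges encode the thresholds above for integer scores):

```python
import pandas as pd

def classify_scores(scores: pd.Series) -> pd.Series:
    """Map trait scores to 0 (LOW, 0-15), 1 (AVG, 16-30), 2 (HIGH, >30)."""
    # Bins are right-closed: (-1, 15], (15, 30], (30, inf)
    return pd.cut(scores, bins=[-1, 15, 30, float('inf')],
                  labels=[0, 1, 2]).astype(int)

demo = pd.Series([0, 15, 16, 30, 31, 48])
print(classify_scores(demo).tolist())  # [0, 0, 1, 1, 2, 2]

# Applied column-wise to every trait column, keeping Subject and the index as-is
frame = pd.DataFrame({'Subject': [100307, 103414],
                      'NEOFAC_A': [37, 27], 'NEOFAC_N': [15, 20]})
classified = frame.copy()
trait_cols = frame.columns[1:]
classified[trait_cols] = frame[trait_cols].apply(classify_scores)
print(classified)
```

Working column-wise avoids building one Series per subject and transposing, so the result keeps a regular `0..n-1` index.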

label_list = []

for row_num in range(test_personality.shape[0]):
    sub_data = test_personality.iloc[row_num,:]
    # classify each trait score (every column after Subject)
    for value in sub_data.iloc[1:]:
        if value <= 15:
            label_list.append(0)
        elif value <= 30:
            label_list.append(1)
        else:
            label_list.append(2)
    # put the subject id in front of the labels
    label_list.insert(0, sub_data.iloc[0])
    class_personality = pd.concat([class_personality, pd.Series(label_list)], axis=1)
    label_list = []

class_personality.head()

| | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 100307 | 100408 | 101915 | 102816 | 103414 | 103515 | 103818 | 105115 | 105216 | 106016 | ... | 196144 | 196750 | 197550 | 198451 | 199150 | 199655 | 200614 | 201111 | 201414 | 205119 |
| 1 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | 2 | 1 | 2 | ... | 2 | 2 | 2 | 1 | 2 | 1 | 2 | 2 | 2 | 2 |
| 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 2 | 2 | 1 |
| 3 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 1 | 2 | ... | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 4 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | ... | 1 | 1 | 2 | 1 | 0 | 0 | 0 | 2 | 0 | 0 |

5 rows × 100 columns

class_personality = class_personality.transpose()

class_personality.columns = personality_df.columns

class_personality.head()

| | Subject | NEOFAC_A | NEOFAC_O | NEOFAC_C | NEOFAC_N | NEOFAC_E |
|---|---|---|---|---|---|---|
| 0 | 100307 | 2 | 1 | 2 | 0 | 2 |
| 0 | 100408 | 2 | 1 | 2 | 0 | 2 |
| 0 | 101915 | 2 | 1 | 2 | 0 | 2 |
| 0 | 102816 | 2 | 1 | 2 | 0 | 2 |
| 0 | 103414 | 1 | 1 | 2 | 1 | 2 |
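Note that every row label in the transposed table is 0: each concatenated Series ended up as a column labelled 0, so the transpose produces a repeated row label. Label-based operations such as `drop(40)` then find nothing. A small reproduction (the three-subject data is illustrative, and the column name 0 is set explicitly here rather than relying on `concat`'s naming) shows the symptom and two ways around it:

```python
import pandas as pd

# Every subject column is labelled 0, as in the table above
wide = pd.concat(
    [pd.Series(v, name=0) for v in ([100307, 2, 1], [100408, 2, 1], [133827, 2, 1])],
    axis=1,
)
long = wide.transpose()
long.columns = ['Subject', 'NEOFAC_A', 'NEOFAC_O']
index_before = list(long.index)  # [0, 0, 0]

# Dropping by label 2 fails because no row carries that label
try:
    long.drop(2)
    drop_failed = False
except KeyError:
    drop_failed = True

# Option 1: reset the index so labels match positions 0..n-1 again
long = long.reset_index(drop=True)

# Option 2: filter on the subject ID, which does not depend on row labels
kept = long[~long['Subject'].isin([133827])]
print(index_before, drop_failed, kept['Subject'].tolist())
```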

class_personality.iloc[:25,:]

| | Subject | NEOFAC_A | NEOFAC_O | NEOFAC_C | NEOFAC_N | NEOFAC_E |
|---|---|---|---|---|---|---|
| 0 | 100307 | 2 | 1 | 2 | 0 | 2 |
| 0 | 100408 | 2 | 1 | 2 | 0 | 2 |
| 0 | 101915 | 2 | 1 | 2 | 0 | 2 |
| 0 | 102816 | 2 | 1 | 2 | 0 | 2 |
| 0 | 103414 | 1 | 1 | 2 | 1 | 2 |
| 0 | 103515 | 1 | 1 | 2 | 1 | 1 |
| 0 | 103818 | 2 | 1 | 2 | 1 | 1 |
| 0 | 105115 | 2 | 1 | 1 | 1 | 1 |
| 0 | 105216 | 1 | 1 | 1 | 1 | 1 |
| 0 | 106016 | 2 | 1 | 2 | 0 | 1 |
| 0 | 106319 | 2 | 2 | 1 | 1 | 1 |
| 0 | 110411 | 2 | 1 | 2 | 0 | 2 |
| 0 | 111009 | 2 | 2 | 2 | 0 | 1 |
| 0 | 111312 | 2 | 1 | 2 | 1 | 1 |
| 0 | 111514 | 1 | 2 | 2 | 1 | 1 |
| 0 | 111716 | 0 | 2 | 2 | 0 | 2 |
| 0 | 113215 | 2 | 2 | 2 | 1 | 2 |
| 0 | 113619 | 2 | 1 | 2 | 0 | 2 |
| 0 | 114924 | 1 | 1 | 2 | 1 | 2 |
| 0 | 115320 | 2 | 1 | 1 | 1 | 2 |
| 0 | 117122 | 1 | 1 | 2 | 1 | 0 |
| 0 | 117324 | 2 | 1 | 2 | 0 | 1 |
| 0 | 118730 | 1 | 1 | 2 | 0 | 1 |
| 0 | 118932 | 2 | 2 | 1 | 1 | 1 |
| 0 | 119833 | 1 | 1 | 1 | 1 | 2 |

class_personality.to_csv(r'/Users/rajdeep_ch/Documents/nma/project/personality_data_classified.csv',index=False)

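# returns an empty array: Subject holds integers, so comparing with the string '133827' never matches
list(np.where(class_personality["Subject"] == '133827'))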

[array([], dtype=int64)]

testing = list(class_personality['Subject'])

testing.index(133827)

40

testing.index(192439)

86

class_personality.iloc[40,:]

Subject 133827

NEOFAC_A 2

NEOFAC_O 1

NEOFAC_C 2

NEOFAC_N 0

NEOFAC_E 1

Name: 0, dtype: int64

class_personality.iloc[86,:]

Subject 192439

NEOFAC_A 2

NEOFAC_O 1

NEOFAC_C 2

NEOFAC_N 2

NEOFAC_E 2

Name: 0, dtype: int64

# class_personality.drop(40) raises KeyError: '[40] not found in axis', because after the
# transpose every row label is 0. Filter on the subject IDs instead and rebuild a 0..n-1 index.
class_personality = class_personality[
    ~class_personality['Subject'].astype(str).isin(subject_id_list_to_remove)
].reset_index(drop=True)

help(load_all_timeseries)

Help on function load_all_timeseries in module __main__:

load_all_timeseries(subject_list, experiment_name, run_num)

Reads the timeseries data of all subjects contained in the subject list for a given experiment

and given run.

Inputs:

subject_list (list): List containing subject ids

experiment_name (str): Name of task under consideration

run_num (int): Number of run task

Return:

data_array (ndarray): timeseries data of all subjects

shape of data returned : (100,360,timepoints)

help(load_all_evs)

Help on function load_all_evs in module __main__:

load_all_evs(subject_list, experiment_name, run_num)

Reads the evs data for all subjects in subject list across all task conditions

Inputs:

subject_list (list): List containing subject ids

experiment_name (str): Name of task under consideration

run_num (int): Number of run task

Returns:

data_array (ndarray): explanatory variables (ev) for all subjects

help(all_average_frames)

Help on function all_average_frames in module __main__:

all_average_frames(subject_list, timeseries_data, evs_data, experiment_name, task_cond)

The function takes in the brain activity measures and behavioural measures for all subjects in a given task condition.

The function returns the brain activity average across all trail points.

The corresponding average contains the time-average brain activity across all ROIs

Input:

timeseries_data (ndarray): Contains the timeseries data for all subjects in a given experiment

evs_data (ndarray): Contains the evs data for all subject

experiment_name (str): Name of the experiment (EMOTION/SOCIAL)

task_condition (str): string name for task condition (fear/neut)

Returns:

average_values (ndarray): time-average activation across all ROIs

shape = (number of subjects, ROIs , 1)

help(load_all_task_score)

Help on function load_all_task_score in module __main__:

load_all_task_score(subject_list, experiment_name, run_num)

Load task performance statistics for one task experiment.

Args:

subject_list (str): subject IDs to load

experiment_name (str) : Name of experiment

run_num (int): 0 or 1

Returns

task_scores (list of lists): A list of task performance scores for all subjects in a particular run

emotion_timeseries = load_all_timeseries(subjects,'EMOTION',0)

emotion_evs = load_all_evs(subjects,'EMOTION',0)

# all_average_frames takes the subject list as its first argument
emotion_average = all_average_frames(subjects, emotion_timeseries, emotion_evs, 'EMOTION', 'fear')

emotion_ts = load_all_task_score(subjects,'EMOTION',0)

emotion_face_score = np.zeros(len(emotion_ts))
emotion_shape_score = np.zeros(len(emotion_ts))

avoid_subjects = ['133827', '192439']

# loop running over all participants
for i in range(len(emotion_ts)):
    sub_task_vals = emotion_ts[i]
    #print(sub_task_vals[0][1],sub_task_vals[2][1])
    #print(sub_task_vals[1][1],sub_task_vals[3][1])
    if subjects[i] not in avoid_subjects:
        # NOTE: in social_ts, indices 0-1 hold mean RT and 2-3 hold accuracy;
        # check the layout of emotion_ts before reading index 0 as accuracy
        face_acc, face_time = sub_task_vals[0][1], sub_task_vals[2][1]
        ies_face = face_time / (100 * face_acc)
        emotion_face_score[i] = ies_face
        shape_acc, shape_time = sub_task_vals[1][1], sub_task_vals[3][1]
        ies_shape = shape_time / (100 * shape_acc)
        emotion_shape_score[i] = ies_shape
    else:
        # -1 marks the excluded subjects
        emotion_face_score[i] = -1
        emotion_shape_score[i] = -1
    # test
    ## emotion_acc_val1[i] = face_acc
    ###shape_acc, shape_time = sub_task_vals[1][1], sub_task_vals[3][1]
    # test
    ## emotion_acc_val2[i] = shape_acc
    #print("Score for shape: {}".format(shape_time,shape_acc),end="\n")
    #print("Score for face: {}".format(face_time / face_acc))
    #emotion_face_score[i] = (face_time / face_acc) / 100
    # (100 / 100) does not produce needed result
    # need to convert the value to percentage
    # use multinomial LR
    #emotion_shape_score[i] = (shape_time/ shape_acc) / 100

Emotion IES (inverse efficiency score) for condition 1 (face): emotion_face_score

Emotion IES for condition 2 (shape): emotion_shape_score
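The inverse efficiency score divides mean reaction time by the proportion of correct responses; the loops here additionally divide by 100 to rescale. A vectorized sketch under that same convention (the helper `inverse_efficiency` and the sample values are hypothetical) marks zero-accuracy entries as `NaN` instead of `-1`, so they drop out of NaN-aware statistics rather than pulling averages down:

```python
import numpy as np

def inverse_efficiency(rt_ms, acc, scale=100.0):
    """IES = mean RT / (scale * proportion correct); NaN where accuracy is 0."""
    rt_ms = np.asarray(rt_ms, dtype=float)
    acc = np.asarray(acc, dtype=float)
    ies = np.full(rt_ms.shape, np.nan)
    ok = acc > 0                      # avoid division by zero
    ies[ok] = rt_ms[ok] / (scale * acc[ok])
    return ies

rt = [479.0, 720.5, 575.0]
acc = [1.0, 0.5, 0.0]
ies = inverse_efficiency(rt, acc)
print(ies)
print(np.nanmean(ies))  # mean over the valid entries only
```

With a `-1` sentinel, an ordinary `mean` silently includes the excluded subjects; with `NaN`, functions such as `np.nanmean` skip them.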

social_ts = load_all_task_score(subjects,'SOCIAL',0)

social_mental_score = np.zeros(len(social_ts))
social_random_score = np.zeros(len(social_ts))

for i in range(len(social_ts)):
    sub_social_score = social_ts[i]
    #print(sub_social_score)
    # mental reaction time; mental accuracy
    #print(sub_social_score[0],sub_social_score[2])
    # random reaction time; random accuracy
    #print(sub_social_score[1],sub_social_score[3],end="\n")
    if subjects[i] not in avoid_subjects:
        mental_rt, mental_acc = sub_social_score[0][1], sub_social_score[2][1]
        ies_mental = mental_rt / (100 * mental_acc)
        print("MENTAL RT: {} | MENTAL ACC : {}| MENTAL IES: {}".format(mental_rt,mental_acc,ies_mental))
        social_mental_score[i] = ies_mental
        print(social_mental_score[i])
        random_rt, random_acc = sub_social_score[1][1], sub_social_score[3][1]
        ies_random = random_rt / (100 * random_acc)
        social_random_score[i] = ies_random
    else:
        # -1 marks the excluded subjects (random accuracy == 0)
        social_mental_score[i] = -1
        social_random_score[i] = -1
    # test for mental_acc
    #social_acc_val1[i] = mental_acc
    #random_rt, random_acc = sub_social_score[1][1], sub_social_score[3][1]
    # test for random_acc
    #social_acc_val2[i] = random_acc
    # random_acc == 0 for the excluded subjects would raise ZeroDivisionError
    #social_mental_score[i] = mental_rt / mental_acc
    #social_random_score[i] = random_rt / random_acc
    #print('MENTAL: {}'.format(mental_rt / mental_acc))
    #print("RANDOM: {}".format(random_rt / random_acc),end="\n\n")

MENTAL RT: 479.0 | MENTAL ACC : 1.0| MENTAL IES: 4.79

4.79

MENTAL RT: 1059.33333333 | MENTAL ACC : 1.0| MENTAL IES: 10.5933333333

10.5933333333

MENTAL RT: 1193.0 | MENTAL ACC : 1.0| MENTAL IES: 11.93

11.93

MENTAL RT: 1189.0 | MENTAL ACC : 1.0| MENTAL IES: 11.89

11.89

MENTAL RT: 738.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.386666666669999

7.386666666669999

MENTAL RT: 720.5 | MENTAL ACC : 0.666666666667| MENTAL IES: 10.807499999994597

10.807499999994597

MENTAL RT: 914.0 | MENTAL ACC : 1.0| MENTAL IES: 9.14

9.14

MENTAL RT: 914.0 | MENTAL ACC : 1.0| MENTAL IES: 9.14

9.14

MENTAL RT: 712.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.126666666669999

7.126666666669999

MENTAL RT: 680.333333333 | MENTAL ACC : 1.0| MENTAL IES: 6.80333333333

6.80333333333

MENTAL RT: 1181.66666667 | MENTAL ACC : 1.0| MENTAL IES: 11.8166666667

11.8166666667

MENTAL RT: 862.0 | MENTAL ACC : 1.0| MENTAL IES: 8.62

8.62

MENTAL RT: 1148.66666667 | MENTAL ACC : 1.0| MENTAL IES: 11.4866666667

11.4866666667

MENTAL RT: 524.666666667 | MENTAL ACC : 1.0| MENTAL IES: 5.2466666666699995

5.2466666666699995

MENTAL RT: 776.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.76666666667

7.76666666667

MENTAL RT: 683.0 | MENTAL ACC : 1.0| MENTAL IES: 6.83

6.83

MENTAL RT: 797.0 | MENTAL ACC : 0.333333333333| MENTAL IES: 23.910000000023906

23.910000000023906

MENTAL RT: 939.666666667 | MENTAL ACC : 1.0| MENTAL IES: 9.396666666669999

9.396666666669999

MENTAL RT: 705.0 | MENTAL ACC : 0.666666666667| MENTAL IES: 10.574999999994713

10.574999999994713

MENTAL RT: 591.0 | MENTAL ACC : 1.0| MENTAL IES: 5.91

5.91

MENTAL RT: 827.333333333 | MENTAL ACC : 1.0| MENTAL IES: 8.273333333330001

8.273333333330001

MENTAL RT: 707.0 | MENTAL ACC : 1.0| MENTAL IES: 7.07

7.07

MENTAL RT: 856.666666667 | MENTAL ACC : 1.0| MENTAL IES: 8.566666666669999

8.566666666669999

MENTAL RT: 658.666666667 | MENTAL ACC : 1.0| MENTAL IES: 6.586666666669999

6.586666666669999

MENTAL RT: 865.666666667 | MENTAL ACC : 1.0| MENTAL IES: 8.656666666669999

8.656666666669999

MENTAL RT: 984.0 | MENTAL ACC : 1.0| MENTAL IES: 9.84

9.84

MENTAL RT: 849.333333333 | MENTAL ACC : 1.0| MENTAL IES: 8.49333333333

8.49333333333

MENTAL RT: 1058.0 | MENTAL ACC : 1.0| MENTAL IES: 10.58

10.58

MENTAL RT: 457.666666667 | MENTAL ACC : 1.0| MENTAL IES: 4.57666666667

4.57666666667

MENTAL RT: 833.0 | MENTAL ACC : 1.0| MENTAL IES: 8.33

8.33

MENTAL RT: 1255.0 | MENTAL ACC : 0.333333333333| MENTAL IES: 37.65000000003764

37.65000000003764

MENTAL RT: 806.0 | MENTAL ACC : 1.0| MENTAL IES: 8.06

8.06

MENTAL RT: 767.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.676666666669999

7.676666666669999

MENTAL RT: 762.333333333 | MENTAL ACC : 1.0| MENTAL IES: 7.623333333330001

7.623333333330001

MENTAL RT: 856.333333333 | MENTAL ACC : 1.0| MENTAL IES: 8.56333333333

8.56333333333

MENTAL RT: 789.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.89666666667

7.89666666667

MENTAL RT: 707.333333333 | MENTAL ACC : 1.0| MENTAL IES: 7.073333333330001

7.073333333330001

MENTAL RT: 605.0 | MENTAL ACC : 1.0| MENTAL IES: 6.05

6.05

MENTAL RT: 647.0 | MENTAL ACC : 1.0| MENTAL IES: 6.47

6.47

MENTAL RT: 797.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.97666666667

7.97666666667

MENTAL RT: 988.333333333 | MENTAL ACC : 1.0| MENTAL IES: 9.88333333333

9.88333333333

MENTAL RT: 991.0 | MENTAL ACC : 1.0| MENTAL IES: 9.91

9.91

MENTAL RT: 546.5 | MENTAL ACC : 0.666666666667| MENTAL IES: 8.197499999995902

8.197499999995902

MENTAL RT: 966.333333333 | MENTAL ACC : 1.0| MENTAL IES: 9.66333333333

9.66333333333

MENTAL RT: 578.666666667 | MENTAL ACC : 1.0| MENTAL IES: 5.7866666666699995

5.7866666666699995

MENTAL RT: 730.5 | MENTAL ACC : 0.666666666667| MENTAL IES: 10.957499999994521

10.957499999994521

MENTAL RT: 557.333333333 | MENTAL ACC : 1.0| MENTAL IES: 5.573333333330001

5.573333333330001

MENTAL RT: 742.0 | MENTAL ACC : 1.0| MENTAL IES: 7.42

7.42

MENTAL RT: 702.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.02666666667

7.02666666667

MENTAL RT: 912.0 | MENTAL ACC : 1.0| MENTAL IES: 9.12

9.12

MENTAL RT: 1022.0 | MENTAL ACC : 1.0| MENTAL IES: 10.22

10.22

MENTAL RT: 744.333333333 | MENTAL ACC : 1.0| MENTAL IES: 7.44333333333

7.44333333333

MENTAL RT: 724.0 | MENTAL ACC : 0.666666666667| MENTAL IES: 10.85999999999457

10.85999999999457

MENTAL RT: 1204.33333333 | MENTAL ACC : 1.0| MENTAL IES: 12.0433333333

12.0433333333

MENTAL RT: 2141.66666667 | MENTAL ACC : 1.0| MENTAL IES: 21.4166666667

21.4166666667

MENTAL RT: 1458.33333333 | MENTAL ACC : 1.0| MENTAL IES: 14.583333333299999

14.583333333299999

MENTAL RT: 759.333333333 | MENTAL ACC : 1.0| MENTAL IES: 7.59333333333

7.59333333333

MENTAL RT: 1031.33333333 | MENTAL ACC : 1.0| MENTAL IES: 10.3133333333

10.3133333333

MENTAL RT: 549.666666667 | MENTAL ACC : 1.0| MENTAL IES: 5.4966666666699995

5.4966666666699995

MENTAL RT: 647.0 | MENTAL ACC : 1.0| MENTAL IES: 6.47

6.47

MENTAL RT: 926.333333333 | MENTAL ACC : 1.0| MENTAL IES: 9.263333333330001

9.263333333330001

MENTAL RT: 841.333333333 | MENTAL ACC : 1.0| MENTAL IES: 8.41333333333

8.41333333333

MENTAL RT: 756.5 | MENTAL ACC : 0.666666666667| MENTAL IES: 11.347499999994326

11.347499999994326

MENTAL RT: 1367.66666667 | MENTAL ACC : 1.0| MENTAL IES: 13.676666666700001

13.676666666700001

MENTAL RT: 555.333333333 | MENTAL ACC : 1.0| MENTAL IES: 5.55333333333

5.55333333333

MENTAL RT: 573.333333333 | MENTAL ACC : 1.0| MENTAL IES: 5.73333333333

5.73333333333

MENTAL RT: 1001.66666667 | MENTAL ACC : 1.0| MENTAL IES: 10.0166666667

10.0166666667

MENTAL RT: 706.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.06666666667

7.06666666667

MENTAL RT: 543.333333333 | MENTAL ACC : 1.0| MENTAL IES: 5.43333333333

5.43333333333

MENTAL RT: 571.0 | MENTAL ACC : 0.666666666667| MENTAL IES: 8.564999999995718

8.564999999995718

MENTAL RT: 662.0 | MENTAL ACC : 1.0| MENTAL IES: 6.62

6.62

MENTAL RT: 759.666666667 | MENTAL ACC : 1.0| MENTAL IES: 7.59666666667

7.59666666667

MENTAL RT: 1313.0 | MENTAL ACC : 1.0| MENTAL IES: 13.13

13.13

MENTAL RT: 694.0 | MENTAL ACC : 1.0| MENTAL IES: 6.94

6.94

MENTAL RT: 818.333333333 | MENTAL ACC : 1.0| MENTAL IES: 8.183333333330001

8.183333333330001

MENTAL RT: 1002.33333333 | MENTAL ACC : 1.0| MENTAL IES: 10.0233333333

10.0233333333

MENTAL RT: 940.666666667 | MENTAL ACC : 1.0| MENTAL IES: 9.406666666669999

9.406666666669999

MENTAL RT: 620.666666667 | MENTAL ACC : 1.0| MENTAL IES: 6.206666666669999

6.206666666669999

MENTAL RT: 1090.5 | MENTAL ACC : 0.666666666667| MENTAL IES: 16.357499999991823

16.357499999991823

MENTAL RT: 918.666666667 | MENTAL ACC : 1.0| MENTAL IES: 9.18666666667

9.18666666667

MENTAL RT: 787.0 | MENTAL ACC : 1.0| MENTAL IES: 7.87

7.87
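The loop above computes a scaled inverse efficiency score, IES = mean RT / (100 × accuracy). The conventional IES divides mean RT by proportion correct, so the factor of 100 only rescales it: 479 ms at accuracy 1.0 gives 4.79. Lower accuracy inflates the score, so 797 ms at accuracy 1/3 gives 23.91. Below is a minimal vectorized sketch. It assumes the per-subject RTs and accuracies have already been gathered into arrays, and the function name `inverse_efficiency` is hypothetical. It returns NaN instead of raising ZeroDivisionError for zero accuracy. It also treats the loop's -1 sentinel as missing, so `np.nanmean` skips excluded subjects instead of letting -1 pull the group mean down.

```python
import numpy as np

def inverse_efficiency(rt, acc, scale=100.0):
    """Scaled inverse efficiency score: rt / (scale * acc).

    scale=100 mirrors the loop above. Entries with zero or missing
    accuracy yield NaN instead of raising ZeroDivisionError.
    """
    rt = np.asarray(rt, dtype=float)
    acc = np.asarray(acc, dtype=float)
    ies = np.full(rt.shape, np.nan)
    valid = acc > 0  # NaN accuracies compare False and stay NaN
    ies[valid] = rt[valid] / (scale * acc[valid])
    return ies

# RT/ACC pairs taken from the printed output above, plus one
# hypothetical zero-accuracy subject (650 ms, accuracy 0).
mental_rt = np.array([479.0, 720.5, 797.0, 650.0])
mental_acc = np.array([1.0, 0.666666666667, 0.333333333333, 0.0])
ies = inverse_efficiency(mental_rt, mental_acc)
print(ies)

# Scores already stored with the loop's -1 sentinel can be masked before averaging
stored = np.array([4.79, -1.0, 11.93])
print(np.nanmean(np.where(stored == -1, np.nan, stored)))
```

Storing NaN rather than -1 keeps the exclusion visible. It also lets reductions such as `np.nanmean` or pandas `DataFrame.mean` (which skips NaN by default) ignore those subjects automatically.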